I’m always interested in finding new music to add to the collection. Finding a new band can be an enjoyable (but time-consuming) process. There are a few avenues I use in my search. The “Discovr music” app is quite useful: it shows similar bands as “spokes” jutting out from the band you searched for. Another is the ABC “Rage” playlist, a chronological listing of the songs they’ll be playing that night. (I’m too old to be staying up watching Rage nowadays.)

I’ve discovered some great non-mainstream stuff this way: Charles Bradley, Fleet Foxes, Tame Impala, Popstrangers (better than their name suggests), Real Estate and City and Colour, to name a few. There’s a lot of trash to sift through to find the few nuggets of gold, and unfortunately the playlist in its current format makes it difficult to find the corresponding YouTube video. My usual method was to select the band and song name with the mouse, then use a Chrome plugin to right-click and search YouTube. It was time-consuming and finicky.

I wrote a Python script to a) solve the problem and b) learn a bit of XPath, which I’d never used before. Previously I would have done this with a bunch of regular expressions that scraped to a file: the script would scan over the text, ‘open the gate’ on hitting the appropriate tag and close it again on finding the closing tag. Everything in between the “gates” would be written to a file. I thought I’d try a different approach this time.
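For comparison, here is a minimal sketch of that “gate” style of scraping. It is not the old script itself; the gate markers (`<p class="list">` to open, `</p>` to close) and the sample fragment are made up for illustration. It works line by line, so it only behaves when the opening and closing tags sit on their own lines, and what it captures still contains markup.

```python
import re

# Hypothetical gate markers: open on a <p class="list"> line, close on </p>.
OPEN_GATE = re.compile(r'<p class="list">')
CLOSE_GATE = re.compile(r'</p>')


def gate_scrape(lines):
    """Return the lines that fall between an opening and a closing tag."""
    captured = []
    gate_open = False
    for line in lines:
        if not gate_open and OPEN_GATE.search(line):
            gate_open = True
            continue  # don't keep the opening tag line itself
        if gate_open and CLOSE_GATE.search(line):
            gate_open = False
            continue  # don't keep the closing tag line either
        if gate_open:
            captured.append(line.strip())
    return captured


# Made-up page fragment, one tag per line.
sample = """<div>
<p class="list">
<strong>Tame Impala</strong> <em>Elephant</em>
</p>
<p class="time">9:00pm</p>
</div>""".splitlines()

print(gate_scrape(sample))
```

Note that the `<strong>` and `<em>` tags come through untouched, so more regular expressions would still be needed to split artist from song.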

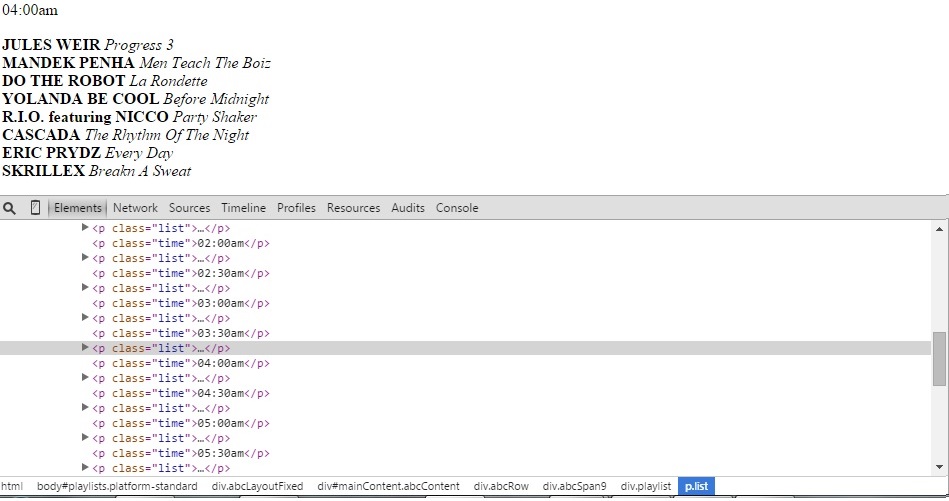

XPath allows a structured document (HTML in this case) to be probed by following the tag hierarchy down to the desired data. First things first: download the playlist and examine the file. To examine the contents I used Chrome’s “Inspect Element”, which let me trace the path from the root node <HTML> to the element that contained the playlist.
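As a small illustration of probing by tag hierarchy, the sketch below parses a made-up page with lxml and walks an absolute path from `<html>` down to the playlist. The page, its class names and the path are simplified assumptions modelled on the real playlist, not a copy of it.

```python
from lxml import html

# A made-up page that mimics the nesting traced with "Inspect Element".
PAGE = """<html><body>
<div class="abcLayoutFixed">
  <div id="mainContent">
    <div class="playlist"><p class="list">playlist goes here</p></div>
  </div>
</div>
</body></html>"""

tree = html.fromstring(PAGE)

# Each "/" step descends one level in the tag hierarchy, starting at <html>.
path = '/html/body/div/div[@id="mainContent"]/div[@class="playlist"]/p'
matches = tree.xpath(path)
print([p.text for p in matches])
```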

The graphic below shows the output of “Inspect Element”. The top section is the rendered text, as the user would see it. The middle section contains a fragment of the HTML, and the bottom section contains the tag hierarchy. This tag hierarchy is what I’ll use to guide the Python program to the data to be scraped.
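lxml can also produce a tag-hierarchy path for an element, similar to the chain “Inspect Element” shows at the bottom of the panel. This sketch uses lxml’s `getpath()` on a made-up fragment. The generated path is purely positional (for example it indexes repeated `<p>` siblings), so for a real scraper the hand-written version with attribute predicates is usually sturdier against layout changes.

```python
from lxml import html

# Made-up fragment loosely shaped like the playlist markup.
PAGE = """<html><body>
<div id="mainContent">
  <div class="playlist">
    <p class="time">9:00pm</p>
    <p class="list"><strong>Artist</strong> <em>Song</em></p>
  </div>
</div>
</body></html>"""

root = html.fromstring(PAGE)
doc = root.getroottree()

# Find the element we care about, then ask lxml for its position in the
# tag hierarchy, expressed as an absolute XPath.
strong = root.xpath('//strong')[0]
path = doc.getpath(strong)
print(path)

# The generated path leads straight back to the same element.
print(doc.xpath(path)[0].text)
```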

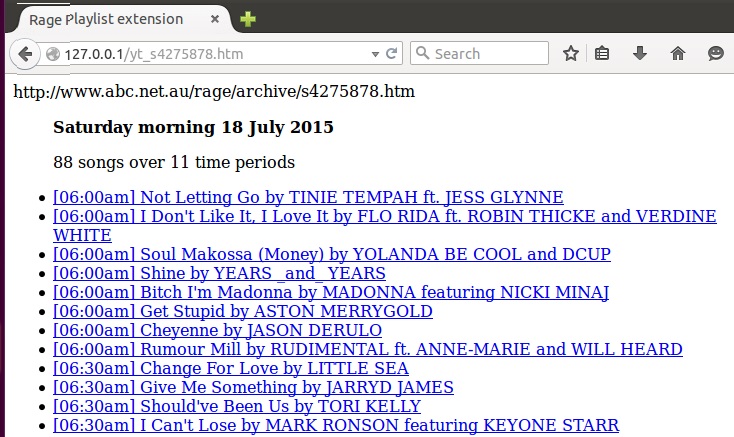

The Python program is a quick and dirty way to use XPath to produce an HTML page. It won’t be invoked as a CGI-BIN program; just run it on the command line and place the resulting file on the local web server. Python needs the lxml module installed (my version of Python had it already), and the script also uses the requests module to download the page.
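If you’re not sure whether the third-party modules are available, a quick check like the one below can save a confusing traceback. It is a small sketch using the standard library’s `importlib.util.find_spec`; the module list is simply the two non-standard imports the script relies on, and `missing_modules` is a hypothetical helper name.

```python
import importlib.util

# Modules the scraper imports that are not part of the standard library.
REQUIRED = ['lxml', 'requests']


def missing_modules(names):
    """Return the names from `names` that can't be found on this Python."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules(REQUIRED)
if missing:
    print('Install first, e.g. pip install ' + ' '.join(missing))
else:
    print('All required modules are available.')
```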

The important part is highlighted in red: it shows the path to the elements needed. When a particular element has to be chosen, it can be selected by its attributes, written as [@attr="val"]. If the element’s text is required, the XPath text() function returns it, but it’s not used here; the script reads each element’s .text property instead.
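To make the two pieces of syntax concrete, this sketch runs an attribute predicate and a `text()` query against a made-up playlist fragment. The times and names are invented; the point is that `[@class="time"]` filters elements by attribute, while appending `/text()` returns strings rather than elements (here giving the same values as reading `.text`).

```python
from lxml import html

# Made-up fragment: two time slots and one list entry.
FRAGMENT = """<div class="playlist">
  <p class="time">9:00pm</p>
  <p class="list"><strong>Artist A</strong> <em>Song A</em></p>
  <p class="time">9:30pm</p>
</div>"""

root = html.fromstring(FRAGMENT)

# [@class="time"] keeps only the <p> elements whose class attribute is "time".
time_paras = root.xpath('//p[@class="time"]')

# Appending /text() returns the text nodes as strings instead of elements.
time_strings = root.xpath('//p[@class="time"]/text()')

print(len(time_paras), time_strings)
print([p.text for p in time_paras])
```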

The code is as follows:

```python
#!/usr/bin/env python3
"""Scrape the ABC Rage playlist and print an HTML page of YouTube links."""

import re
import sys
from html import escape             # stdlib escaping for text placed in HTML
from urllib.parse import urlencode

import requests
from lxml import html               # lxml's HTML parser (rebinds the name "html")

DEFAULT_URL = 'http://www.alphastar.net.au/rage/rage.html'

# Path from the root node down to the playlist container, as traced with
# Chrome's "Inspect Element".
PLAYLIST_XPATH = ('//html/body//div[@class="abcLayoutFixed"]'
                  '/div[@id="mainContent"]/div[@class="abcRow"]'
                  '/div[@class="abcSpan9"]/div[@class="playlist"]')


def get_date(page_text):
    """Return the raw <p> line that mentions the date, or '' if none."""
    match = re.search(r'<p.*date.*p>', page_text)
    return match.group(0) if match else ''


def check_lengths(artist_list, song_list):
    """Bail out if artists and songs can't be paired index-for-index."""
    if len(artist_list) != len(song_list):
        print('trouble: artist and song counts differ', file=sys.stderr)
        sys.exit(1)


def main():
    url = sys.argv[1] if len(sys.argv) > 1 else DEFAULT_URL
    page_text = requests.get(url).text

    artist_list = []
    song_list = []
    time_list = []
    song_artist_time_list = []  # entry i = index into time_list for song i

    tree = html.fromstring(page_text)
    for playlist_tag in tree.xpath(PLAYLIST_XPATH):
        # A leading "." keeps each search inside this playlist <div>;
        # a bare "//" would search the whole document again.
        first_time = len(time_list)
        for t1 in playlist_tag.xpath('.//p[@class="time"]'):
            time_list.append((t1.text or '').strip())
        # Each <p class="list"> holds the songs for one time period.
        for time_ctr, lt in enumerate(playlist_tag.xpath('.//p[@class="list"]')):
            for el1 in lt.xpath('strong'):      # artist names
                artist_list.append((el1.text or '').strip())
            for el2 in lt.xpath('em'):          # song titles
                song_list.append((el2.text or '').strip())
                song_artist_time_list.append(first_time + time_ctr)

    check_lengths(artist_list, song_list)

    print('<html>')
    print('<head><title>Rage Playlist extension</title></head>')
    print('<body>')
    print('<b>' + get_date(page_text) + '</b>')
    print('<p>%d songs over %d time periods</p>'
          % (len(song_artist_time_list), len(time_list)))
    print('<ul>')
    for artist, song, t in zip(artist_list, song_list, song_artist_time_list):
        # btnI=I simulates "I'm Feeling Lucky"; urlencode escapes spaces, & etc.
        query = urlencode({'btnI': 'I', 'q': 'youtube ' + artist + ' ' + song})
        print('<li><a href="http://www.google.com/search?%s">[%s] %s by %s</a></li>'
              % (escape(query), escape(time_list[t]), escape(song), escape(artist)))
    print('</ul>')
    print('</body>')
    print('</html>')


if __name__ == '__main__':
    main()
```

The code is simple; the main thing I wanted to show was processing via XPath. Notice that the XPath only goes as far as <p class="list">. This is a container element that holds the data we need: the artist is in the <strong> element and the song title is in the <em> element.
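Here is a small sketch of that container pattern on a made-up fragment: select each `<p class="list">`, then use paths relative to it to pull out the `<strong>` and `<em>` children. It assumes, as the script’s inner loops do, that a container’s artists and songs appear in matching order. It also shows a common XPath gotcha: a path starting with `//` searches the whole document even when called on a single element, while `.//` stays inside it.

```python
from lxml import html

# Made-up fragment with two list containers.
FRAGMENT = """<div class="playlist">
  <p class="list"><strong>Artist A</strong> <em>Song A</em></p>
  <p class="list"><strong>Artist B</strong> <em>Song B</em></p>
</div>"""

root = html.fromstring(FRAGMENT)

pairs = []
for container in root.xpath('.//p[@class="list"]'):
    # Paths without a leading "/" are relative to `container`, so these only
    # see the <strong>/<em> children of this one <p class="list">.
    for strong, em in zip(container.xpath('strong'), container.xpath('em')):
        pairs.append((strong.text, em.text))

first = root.xpath('.//p[@class="list"]')[0]
whole_doc = len(first.xpath('//strong'))    # searches the entire document
just_here = len(first.xpath('.//strong'))   # searches inside `first` only
print(pairs, whole_doc, just_here)
```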

So now we can iterate over these <p class="list"> tags (there are 13 of them). Simply printing them out won’t suffice, as we wouldn’t be able to pair artists with songs, so I’ve created two lists that are filled in step and accessed with a common index. A better approach would be a single list of objects, each holding both values, which would do away with the two lists, but I’m keeping it simple for now.
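The “single list of objects” idea could look something like this. It is only a sketch: `Track` and `build_tracks` are hypothetical names, and the function folds the script’s parallel lists (`artist_list`, `song_list`, `time_list` and `song_artist_time_list`) into one list so that each entry carries its own artist, song and time slot.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """One playlist entry: artist, song and the time slot it aired in."""
    artist: str
    song: str
    time: str


def build_tracks(artist_list, song_list, time_list, song_artist_time_list):
    """Fold the parallel lists into one list of Track objects."""
    if not (len(artist_list) == len(song_list) == len(song_artist_time_list)):
        raise ValueError('artist and song lists do not line up')
    return [Track(artist, song, time_list[t])
            for artist, song, t in zip(artist_list, song_list,
                                       song_artist_time_list)]


# Made-up data: two songs, both in the first time slot.
tracks = build_tracks(['Artist A', 'Artist B'], ['Song A', 'Song B'],
                      ['9:00pm'], [0, 0])
for track in tracks:
    print(track.time, track.song, 'by', track.artist)
```

With the values bundled together, the length check happens once when the objects are built, and the printing loop no longer needs an index.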

Once the document is parsed, the script checks that both lists are the same length. If they are, we’re good to go. Now we just need to add some HTML decoration around the scraped data for presentation. One way to make things easier is to simulate Google’s “I’m Feeling Lucky” with the extra parameter “btnI=I”. This skips the Google results page and goes straight to the first result, which should be the YouTube video.
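A sketch of building that link with the standard library, assuming Google still honours the `btnI` parameter in this way. `lucky_url` is a hypothetical helper name. Using `urlencode` rather than gluing strings together matters because band and song names can contain spaces and characters such as `&`, which would otherwise break the query string.

```python
from urllib.parse import urlencode


def lucky_url(artist, song):
    """Build a Google "I'm Feeling Lucky" search URL for an artist/song pair."""
    # btnI=I asks for the first result directly; urlencode turns spaces into
    # "+" and escapes characters like "&" that are unsafe in a query string.
    query = urlencode({'btnI': 'I', 'q': 'youtube ' + artist + ' ' + song})
    return 'http://www.google.com/search?' + query


print(lucky_url('Fleet Foxes', 'Mykonos'))
# → http://www.google.com/search?btnI=I&q=youtube+Fleet+Foxes+Mykonos
```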

The page output is very simple HTML, now with clickable links to the YouTube videos.
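For the presentation step, this sketch shows one way to wrap each entry in a list item while escaping the scraped text, so a name containing `&` or `<` can’t break the markup. `render_item` and `render_page` are hypothetical helpers, and the artist, song and URL values are made up.

```python
from html import escape


def render_item(time, artist, song, url):
    """Return one <li> link, escaping text so characters like & and < are safe."""
    return '<li><a href="%s">[%s] %s by %s</a></li>' % (
        escape(url), escape(time), escape(song), escape(artist))


def render_page(items):
    """Wrap rendered <li> items in a minimal HTML page."""
    return ('<html><head><title>Rage Playlist extension</title></head>'
            '<body><ul>' + ''.join(items) + '</ul></body></html>')


item = render_item('9:00pm', 'Artist & Band', 'Some Song',
                   'http://www.google.com/search?btnI=I&q=youtube+x')
page = render_page([item])
print(page)
```

The URL is escaped too: inside an HTML attribute the `&` between query parameters should be written as `&amp;`, and browsers decode it back before following the link.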